Selenium in blood serum

Atomic absorption spectroscopy for trace analysis

Blood or serum analysis can help in the diagnosis of numerous diseases and is also used to investigate possible excessive or insufficient supplementation with vitamins or trace elements. Blood serum is a difficult analytical matrix since it contains many different components, and serum analysis is therefore a major challenge. Atomic absorption spectroscopy offers a reliable and robust analysis method.

Instrumental analysis is an important diagnostic tool in the analysis of blood or blood serum. Many causes of disease can be determined from blood composition and can be treated accordingly. In addition to its many main components, blood also contains substances that the human body needs only in minute amounts. One of these substances is selenium.

Selenium is an essential trace element for the human organism. This means that the body only needs selenium in trace amounts. The selenium concentration in serum lies in the range of about 50 – 120 µg/L. Selenium deficiency occurs below this range and may cause a variety of problems. An excess of selenium is, however, toxic. The margin between selenium deficiency and a toxic concentration is very narrow. It is therefore important to determine the selenium concentration in blood and to monitor any additional selenium intake closely.

How atomic absorption spectroscopy works

Atomic absorption spectroscopy (AAS) with a graphite furnace has proven successful in the analysis of trace elements in serum. The advantages of this technique are its high detection sensitivity (measuring range in the low µg/L range), the small sample volume required (10 – 20 µL) and its relatively low susceptibility to difficult matrices.

In order to operate an atomic absorption spectrometer, special lamps are required that contain the specific element to be analyzed – selenium determination therefore requires a selenium lamp. The selenium in the lamp is excited in such a way that it emits selenium-specific light.

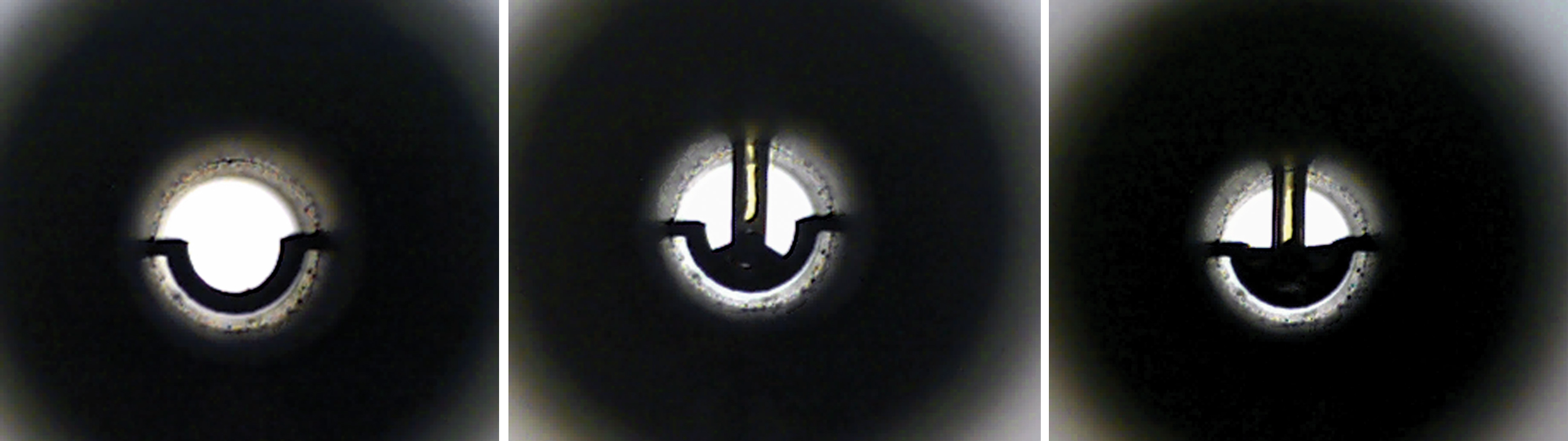

Figure 1: The images show a graphite tube in the optical beam before and during the injection (total of 25 µL). It is important that the sample is carefully and ‘reproducibly’ placed in the tube.


The element-specific light is directed via special mirrors through an optical path in which a so-called atomization unit is placed. In trace analysis, a graphite furnace atomization unit is used. This furnace includes a tube of about 3 cm in length made of compressed graphite. After passing through the graphite tube, the element-specific light is directed by further optical components onto a photomultiplier, which measures the incident light.

For the analysis, an aliquot of the sample (approx. 20 µL) is injected into the graphite tube and heated electrothermally. First, the solvent is evaporated and the remaining sample components are ashed. In the subsequent atomization step, the sample is supplied with enough thermal energy to convert the analyte into free ground-state atoms of the respective element. These ground-state atoms absorb the element-specific light emitted by the lamp. The system measures the attenuation of the light intensity (atomic absorption) during the atomization phase.
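The attenuation described above is conventionally expressed as absorbance, the base-10 logarithm of the ratio of incident to transmitted light intensity. The following is a minimal sketch of that definition only; the function name and the intensity readings are illustrative assumptions and do not represent the internal signal processing of any particular spectrometer.

```python
import math


def absorbance(incident_intensity: float, transmitted_intensity: float) -> float:
    """Return absorbance A = log10(I0 / I).

    incident_intensity    -- light intensity without absorbing atoms (I0)
    transmitted_intensity -- light intensity during atomization (I)
    Both values are in the same arbitrary detector units.
    """
    if incident_intensity <= 0 or transmitted_intensity <= 0:
        raise ValueError("intensities must be positive")
    return math.log10(incident_intensity / transmitted_intensity)


# Hypothetical detector readings (arbitrary units), for illustration only:
# half of the light reaching the detector corresponds to A of about 0.30.
print(round(absorbance(100.0, 50.0), 3))
```

An absorbance of 0 means that no light was absorbed; an absorbance of 1 means that only a tenth of the incident light reached the detector.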

During atomization, temperatures of up to 3,000 °C are generated. To protect the graphite tube from oxidation (by atmospheric oxygen), a small continuous stream of argon is directed through the tube. The argon gas flow also focuses the resulting atomic cloud. The measuring range for selenium lies at about 2 µg/L to 25 µg/L (LOD < 0.5 µg/L) for sensitive systems (AA-7000G).

Various graphite tubes are available for graphite-furnace AAS. For serum analysis, graphite tubes with an omega-shaped platform have proven to be successful as they heat the sample uniformly.

Sample preparation

In addition to water (> 90 %) and soluble substances that occur naturally in blood, serum contains mainly proteins (about 7 %). Because of the high viscosity and the organic matrix (proteins), the serum sample is diluted at a ratio of 1:10 with ultrapure water. To further reduce the viscosity of the diluted sample, a surfactant (Triton X) is added to the dilution. This dilution can be placed directly in an autosampler vial for subsequent analysis. Due to the dilution factor (factor 10), the working range of this analysis is about 20 µg – 250 µg per liter of serum.
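The conversion between the measuring range of the instrument and the working range in undiluted serum is a simple multiplication by the dilution factor. A minimal sketch, in which the function name is an illustrative assumption:

```python
def serum_concentration(measured_ug_per_l: float, dilution_factor: float = 10.0) -> float:
    """Convert a concentration measured in the diluted sample back to
    the concentration in the undiluted serum (both in µg/L)."""
    if dilution_factor <= 0:
        raise ValueError("dilution factor must be positive")
    return measured_ug_per_l * dilution_factor


# Measuring range of the instrument for selenium (from the text): 2 to 25 µg/L
low, high = 2.0, 25.0
print(serum_concentration(low), serum_concentration(high))  # prints: 20.0 250.0
```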

Temperature program

Selective, straightforward and sensitive analysis using graphite-tube AAS requires an adapted and optimized temperature program. First, the water must be evaporated slowly without splashing the sample in the graphite tube. The interfering organic substances are then ashed at temperatures up to 1,100 °C.

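Selenium has a boiling point of 685 °C, which is well below the ashing temperature. Suitable measures must therefore be taken to prevent selenium from being lost before atomization, which would lead to measurement values that are too low. Injecting a small amount of palladium nitrate solution as a modifier suffices for this purpose. This can be programmed and carried out automatically via an autosampler.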

The palladium modifies the selenium in such a way that it only evaporates at higher temperatures and therefore remains in the tube even at ashing temperatures such as 1,100 °C. The atomization step then takes place at 2,500 °C and takes just a few seconds. The absorption measurement also takes place during this time span.

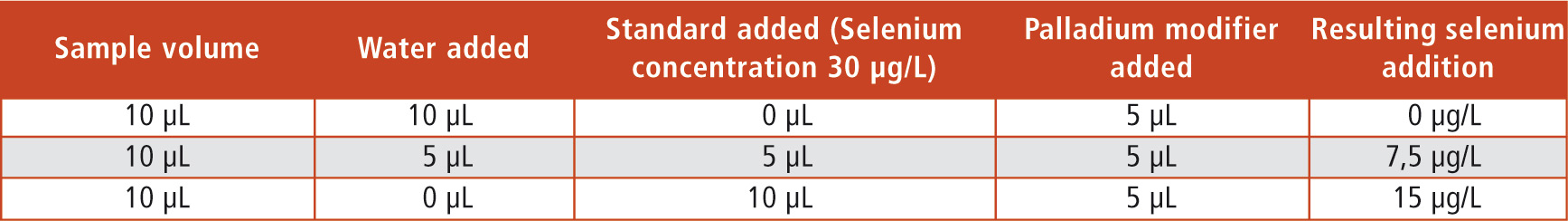

Table 1: Composition of the sample injection for the individual measuring points and the resulting concentrations of the selenium addition


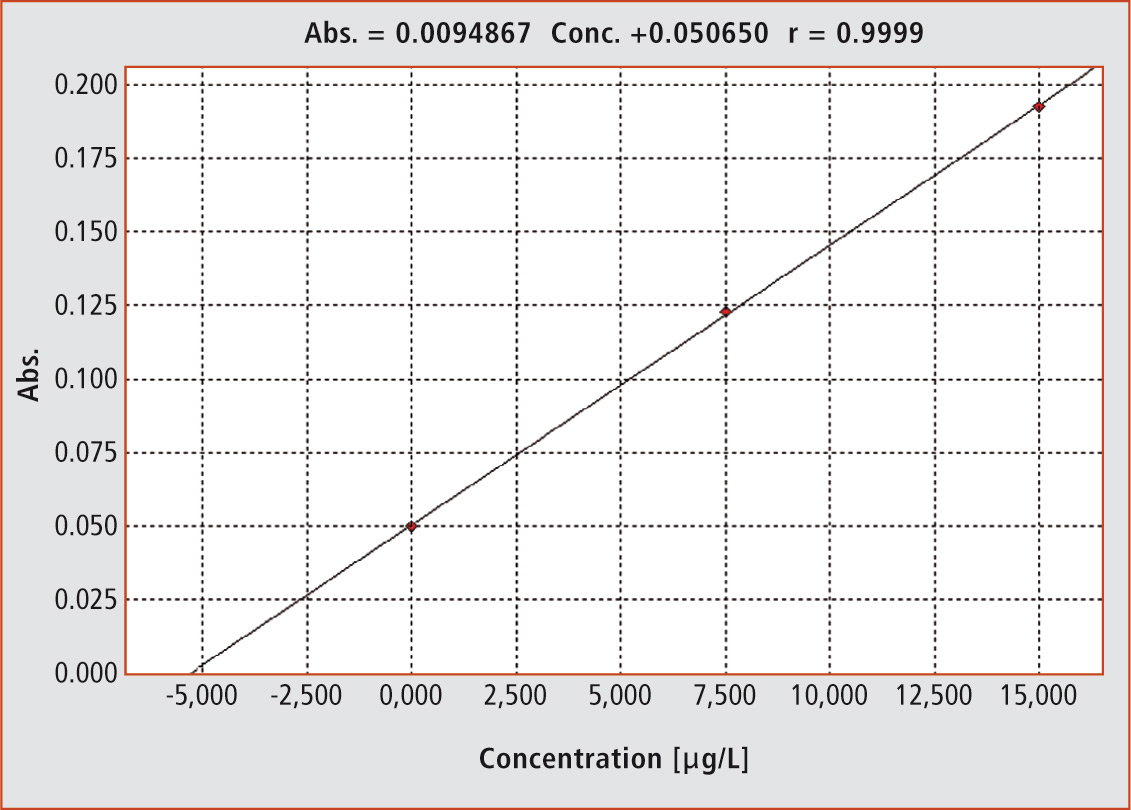

Figure 2: The graph shows the evaluation function according to the standard addition method (r = 0.9999)


Calibration

In graphite-furnace AAS, absorption is recorded along the time-axis during atomization. This results in a peak, whose height or area is used for calibration as a measure of concentration. For evaluation, external calibrations are typically used.
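Peak height and peak area can both be derived from the time-resolved absorption signal. The sketch below takes the height as the maximum reading and approximates the area by trapezoidal integration. The function names and the example readings are illustrative assumptions; real instrument software may apply baseline correction and smoothing that are not shown here.

```python
def peak_height(absorbance: list[float]) -> float:
    """Return the maximum absorbance recorded during atomization."""
    return max(absorbance)


def peak_area(times_s: list[float], absorbance: list[float]) -> float:
    """Return the integrated absorbance (in absorbance x seconds),
    approximated with the trapezoidal rule."""
    if len(times_s) != len(absorbance):
        raise ValueError("times and absorbance must have the same length")
    area = 0.0
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        area += 0.5 * (absorbance[i] + absorbance[i - 1]) * dt
    return area


# Hypothetical signal sampled every 0.5 s (values invented for illustration)
times = [0.0, 0.5, 1.0, 1.5, 2.0]
signal = [0.0, 0.1, 0.2, 0.1, 0.0]
print(peak_height(signal), round(peak_area(times, signal), 3))
```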

For difficult matrices such as blood serum, the standard addition method has become an established evaluation method. Increasing amounts of a standard solution are added to the sample, and each mixture is measured (Table 1, Figure 2). This yields measurements of the sample at several concentration levels, all of which are subject to the same matrix interferences, so that the individual matrix effects of the sample are taken into account.

When the measured signal (peak height or area) is plotted against the added selenium concentration and the fitted line is extrapolated to zero signal, it intersects the x-axis at a negative value whose magnitude corresponds to the selenium concentration of the sample. In this way, each sample obtains its own internal evaluation function. The injection sequence is fully programmable and can be carried out using an autosampler. The user only has to fill the sample vials and provide the standard addition solution and the modifier. Evaluation of the results also takes place automatically.
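The evaluation by standard addition amounts to a straight-line fit followed by an extrapolation to zero signal. The following sketch uses an ordinary least-squares fit; the function name and the data points are illustrative assumptions and are not taken from Table 1 or Figure 2.

```python
def standard_addition(added_conc: list[float], signal: list[float]) -> tuple[float, float, float]:
    """Fit signal = slope * added_conc + intercept by least squares and
    return (slope, intercept, sample_concentration).

    The fitted line crosses the x-axis at -intercept / slope; the sample
    concentration is the magnitude of that value, i.e. intercept / slope.
    """
    n = len(added_conc)
    if n < 2 or n != len(signal):
        raise ValueError("need at least two matching data points")
    mean_x = sum(added_conc) / n
    mean_y = sum(signal) / n
    sxx = sum((x - mean_x) ** 2 for x in added_conc)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(added_conc, signal))
    if sxx == 0 or sxy == 0:
        raise ValueError("cannot fit a line with non-zero slope to these data")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept, intercept / slope


# Hypothetical additions (µg/L in the measured solution) and peak areas
added = [0.0, 5.0, 10.0]
areas = [0.05, 0.10, 0.15]
slope, intercept, conc = standard_addition(added, areas)
print(round(conc, 3))  # concentration in the measured (diluted) solution
```

The result refers to the measured solution. For a serum sample diluted 1:10, it would be multiplied by the dilution factor of 10 to obtain the concentration in serum.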

Measurement example

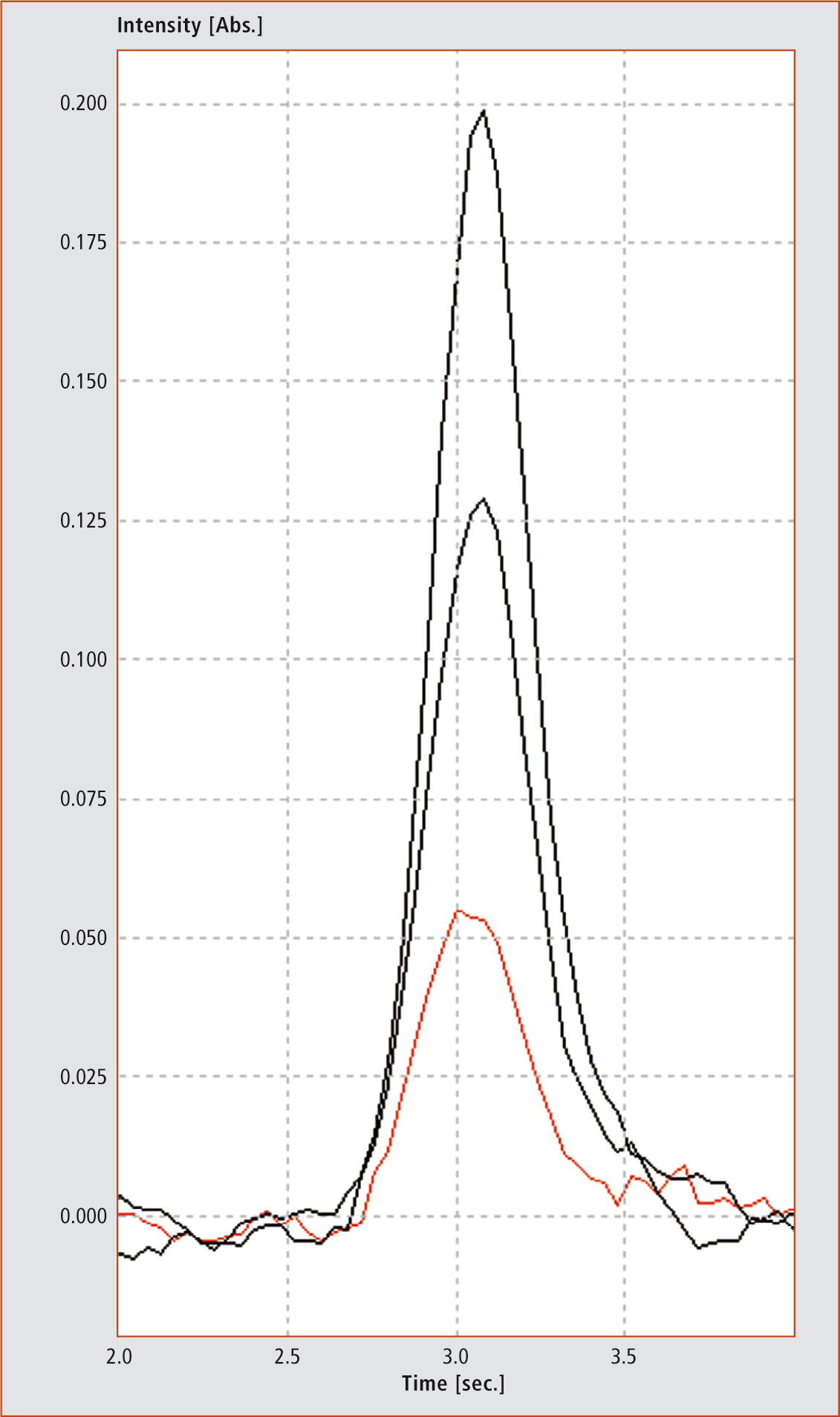

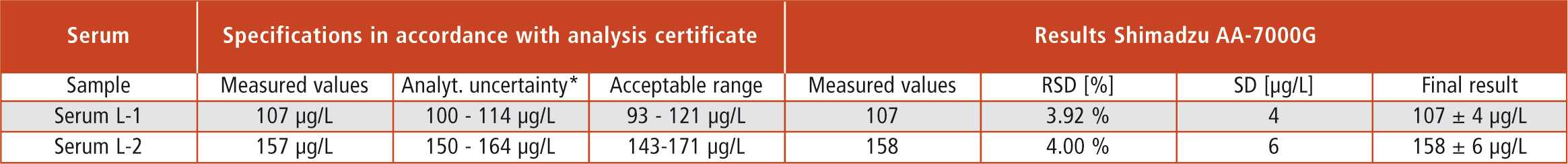

Two certified sera with different concentrations were tested for method development. The results and the standard deviations were obtained from three injections for each concentration level (Figure 3, Table 2).

Figure 3: Peaks of three injections (red = sample without addition, black = the two injections with different amounts of added selenium). The y-axis shows the absorption, the x-axis the time in seconds.


Table 2: Certified reference sample concentrations (Sero, Norway) and related statistical data for results recovery (confidence interval/acceptance range) as well as analytical results of the AA-7000G (*95 % confidence interval)
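The statistics reported for replicate injections, and the comparison with a certified value, can be sketched as follows. The function name and all numerical values are illustrative assumptions; they are not the results from Table 2.

```python
import statistics


def summarize_replicates(replicates: list[float], certified_value: float) -> dict[str, float]:
    """Return mean, sample standard deviation, relative standard deviation (%)
    and recovery (%) against a certified reference value."""
    mean = statistics.mean(replicates)
    sd = statistics.stdev(replicates)  # sample standard deviation (n - 1)
    return {
        "mean": mean,
        "sd": sd,
        "rsd_percent": 100.0 * sd / mean,
        "recovery_percent": 100.0 * mean / certified_value,
    }


# Hypothetical results of three injections (µg/L) and a hypothetical certified value
summary = summarize_replicates([98.0, 100.0, 102.0], certified_value=100.0)
print(summary)
```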


Conclusion

It is anything but easy to analyze trace elements in difficult matrices such as blood serum. A robust measurement system with an optimized furnace program, appropriate equipment and a suitable data evaluation method can make such specialized analytics available for routine analyses.

Read for you

in G.I.T. Labor-Fachzeitschrift 8/2014